When serverless first gained mainstream attention, it was framed almost exclusively as a way to reduce infrastructure spend. The messaging focused on eliminating idle servers, paying only for execution time, and avoiding overprovisioning. While these benefits are real, they distract from what actually makes serverless a transformative architectural shift.

The central value of serverless is not cost efficiency. It is operational speed.

Serverless architectures fundamentally change how teams design systems, deploy changes, handle failures, and respond to growth. They reduce the structural drag that slows organizations down, not by optimizing engineering effort, but by removing entire categories of work from the system. What’s left is not just simpler infrastructure—it’s faster decision-making, faster delivery, and faster learning.

In competitive markets, speed is no longer about writing code more quickly. It’s about how rapidly a team can move from idea to production without destabilizing the product, the team, or the business. Serverless shifts that balance in ways traditional infrastructure never could.

Speed Is Determined by Architecture, Not Effort

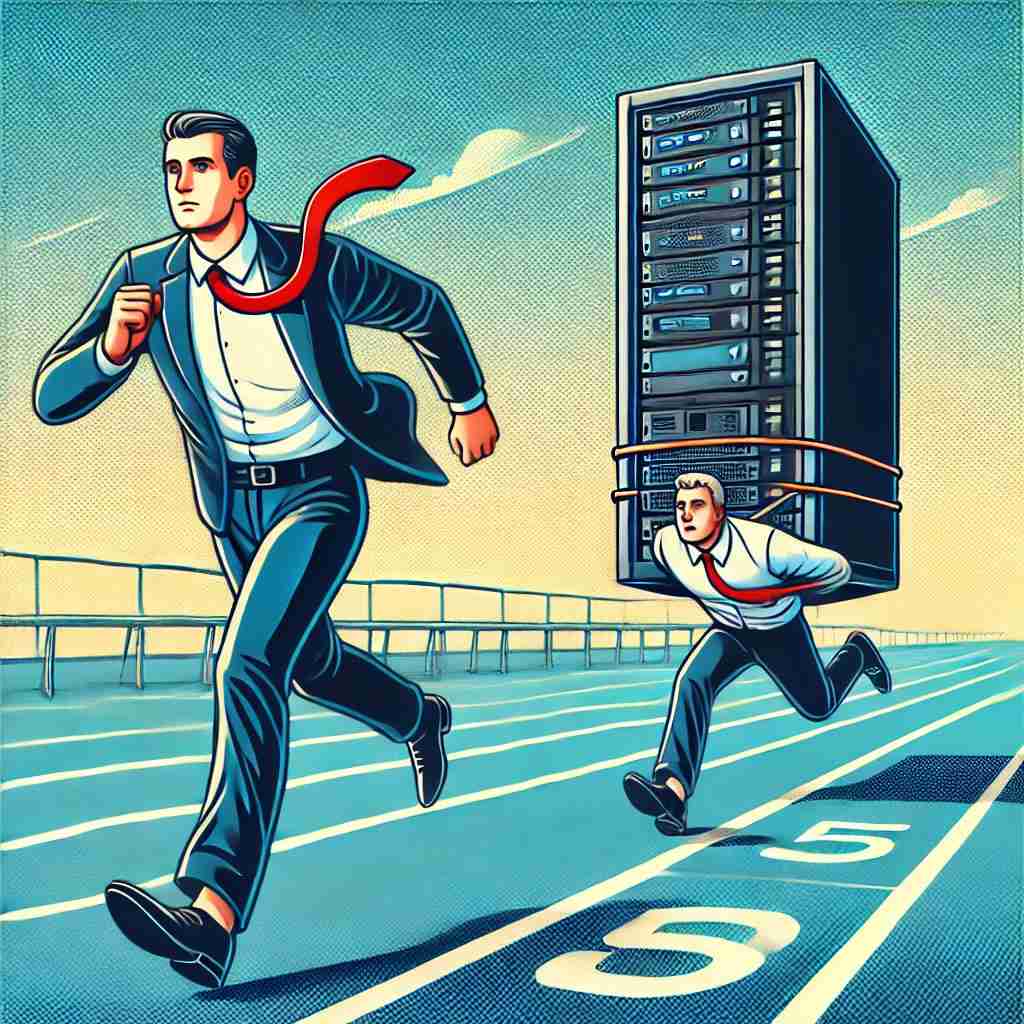

Many teams try to move faster by working harder. They hire better engineers, compress timelines, and lean on process improvements in the hope that productivity will increase. But velocity is not something that can be brute-forced. It is the result of system design.

If every deployment requires coordination across multiple teams, speed is constrained by communication overhead. If infrastructure changes require planning sessions and approvals, speed is constrained by governance. If scaling involves provisioning cycles, hardware limits, or capacity forecasts, speed is constrained by operations.

Serverless architectures remove those constraints, not by making teams more disciplined, but by eliminating the processes that enforce delay in the first place. When compute capacity no longer needs to be provisioned, discussions about provisioning disappear. When infrastructure management is abstracted away, meetings about maintenance disappear. When deployment becomes routine and low-risk, release cycles shorten naturally.

Speed does not come from pressure. It comes from removing friction.

AWS Lambda and the Redefinition of Application Design

AWS Lambda is often described as a way to run code without managing servers. This description is technically correct but conceptually misleading. Lambda does not merely replace virtual machines with a different billing model. It encourages a fundamentally different way of designing systems.

Traditional applications are long-running processes that must be deployed, configured, scaled, monitored, and kept alive. Serverless functions invert that model. Execution becomes ephemeral. Scaling is automatic. Failures are isolated by default. Infrastructure becomes reactive instead of persistent.

This changes how architectures evolve. Systems stop being built as centralized applications and begin to emerge as collections of small, purpose-built functions connected by events. Each function does one thing, executes briefly, and then disappears. There is no process to keep alive and no environment to maintain.
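To make the "one function, one job" idea concrete, here is a minimal Python sketch of a Lambda handler that reacts to S3 upload notifications. It assumes the standard S3 event notification shape (`Records[].s3.bucket.name` and `Records[].s3.object.key`). The function name `handle_upload` and the sample bucket and key are hypothetical. The handler keeps no state between calls and only reads the event it is given.

```python
from urllib.parse import unquote_plus


def handle_upload(event, context=None):
    """Process S3 'object created' notifications, one record at a time.

    The function does one thing: it extracts the bucket and key of each
    uploaded object and returns a summary. It keeps no state between calls.
    """
    processed = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # S3 URL-encodes object keys in event notifications (spaces become '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        processed.append(f"{bucket}/{key}")
    return {"processed": processed}


if __name__ == "__main__":
    # Hypothetical sample event for local experimentation.
    sample_event = {
        "Records": [
            {"s3": {"bucket": {"name": "uploads"}, "object": {"key": "reports/q1+summary.csv"}}}
        ]
    }
    print(handle_upload(sample_event))
```

In a deployment, Lambda would call `handle_upload(event, context)` for each event. No server process needs to be started or kept alive around it.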

More importantly, this changes how engineers think. Instead of designing applications, they design behavior. Instead of managing execution environments, they define workflows. Instead of worrying about uptime, they structure resilience.

The result is not just a different technical stack. It’s a different mental model for building software.

Event-Driven Systems Create Flow Instead of Bottlenecks

Serverless design naturally leads to event-driven architecture. Rather than building linear flows where one system invokes another directly, systems respond to events as they occur. Uploading a file generates an event. Completing a payment generates an event. Failing to process a message generates an event.

This design removes most blocking dependencies. Components do not wait for each other; they react independently.

In practice, this removes the waiting that accumulates when every step has to call the next one synchronously. There are no tightly coupled pipelines where one failure halts the entire process. Instead, failures are localized, retries are automated, and alternate paths remain operational.
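Lambda's SQS integration offers one concrete form of localized failure and automated retry: partial batch responses. The sketch below assumes `ReportBatchItemFailures` is enabled on the event source mapping. The handler returns the IDs of the messages it could not process. Only those messages are treated as failed and become visible on the queue again for retry, and the rest of the batch counts as done. `process_order` is a hypothetical stand-in for real business logic.

```python
import json


def process_order(payload: dict) -> None:
    """Hypothetical business logic: rejects orders without an 'id'."""
    if "id" not in payload:
        raise ValueError("order is missing 'id'")


def handle_queue_batch(event, context=None):
    """Process an SQS batch and report only the messages that failed.

    With ReportBatchItemFailures enabled, Lambda retries only the listed
    messages, so one bad message does not force the whole batch to be redone.
    """
    failures = []
    for record in event.get("Records", []):
        try:
            process_order(json.loads(record["body"]))
        except Exception:
            # Any failure marks only this message for retry.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```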

Event-driven systems also support incremental evolution. A new feature can subscribe to an existing event without modifying the original producer. A workflow can be extended without rewriting core logic. Behavior can change without redeployment of unrelated components.
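A toy in-memory event bus can illustrate the additive pattern. This is a sketch only. It is not how managed buses such as Amazon EventBridge or SNS are implemented, and `EventBus`, `complete_payment`, and the `PaymentCompleted` event name are hypothetical. It shows two things: a second subscriber can be added without touching the producer, and a failing subscriber does not stop the others.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class EventBus:
    """Toy in-memory event bus: producers publish, subscribers react independently."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, detail: dict) -> int:
        """Deliver to every subscriber and return how many succeeded."""
        delivered = 0
        for handler in self._subscribers[event_type]:
            try:
                handler(detail)
                delivered += 1
            except Exception:
                # A failing subscriber does not block the others; a real bus
                # would retry it or route the event to a dead-letter queue.
                pass
        return delivered


def complete_payment(bus: EventBus, order_id: str) -> None:
    # The producer knows nothing about who consumes the event.
    bus.publish("PaymentCompleted", {"order_id": order_id})
```

Usage: subscribe a receipts handler today. Later, subscribe a loyalty-points handler to the same `PaymentCompleted` event. `complete_payment` stays exactly as it is.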

This is how modern systems stay adaptable under continuous change. Not through monolithic redesigns, but through additive evolution.

Managed Services Reduce Risk, Not Responsibility

One of the most common objections to serverless and managed services is the fear of “losing control.” Engineers accustomed to managing infrastructure themselves often worry that abstraction hides complexity that could later become a liability. In reality, this mindset confuses control with ownership.

Managing everything in-house does not remove risk. It concentrates it.

Operational failures, security misconfigurations, poor scaling decisions, and under-maintained infrastructure are among the most common causes of outages, and every one of them is a risk a team takes on when it runs its own infrastructure. Running infrastructure is not a badge of competence; it is a source of constant operational exposure.

Managed services shift risk away from individual teams and distribute it across platforms designed specifically to handle large-scale operations. When databases manage replication automatically, system design becomes more reliable by default. When queues manage retries and back-pressure, failure handling becomes consistent by design. When platforms manage uptime, disaster recovery stops being a project and becomes a property.
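Letting queues own retries has one practical consequence. Standard SQS queues deliver messages at least once, so the same message can arrive twice. A common complement is an idempotent consumer. The sketch below is hypothetical (`IdempotentProcessor` is not a library class). It keeps processed IDs in an in-memory set purely for illustration; a real system would need a durable store shared across function instances.

```python
from typing import Callable


class IdempotentProcessor:
    """Skip messages that have already been handled, so redelivery is harmless.

    The in-memory set is an illustrative assumption; production code would
    record processed IDs in a durable store shared by all instances.
    """

    def __init__(self, apply_effect: Callable[[dict], None]) -> None:
        self._seen: set[str] = set()
        self._apply_effect = apply_effect

    def handle(self, message_id: str, payload: dict) -> bool:
        if message_id in self._seen:
            return False  # duplicate delivery: do nothing
        self._apply_effect(payload)
        # Record only after the effect succeeds, so a failed attempt can be retried.
        self._seen.add(message_id)
        return True
```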

Serverless environments reduce the number of mistakes that teams are even capable of making. And that, more than anything, increases speed.

When fewer things can go wrong, teams move with more confidence.

Deployment Changes Behavior Before It Changes Technology

The most striking effect of serverless is not technical. It is psychological.

Teams that work on fragile systems deploy less frequently. Teams that release in large batches treat deployment as an exception. Teams that fear downtime associate change with risk.

Serverless environments encourage a very different behavior pattern. Because deployments are smaller, automated, and isolated, teams release more often. Rollbacks are easier. Failures are less catastrophic. The cost of experimentation drops.
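Lambda aliases show one mechanism behind easier rollbacks. An alias can split traffic between two published versions through a routing configuration, so a canary release or a rollback becomes a configuration change. The helpers below are a hypothetical sketch. `canary_routing_config` only builds the `RoutingConfig` dictionary that boto3's `update_alias` accepts, and it assumes an empty weights map clears the split. `should_roll_back` uses an assumed 1% error-rate threshold. Neither function calls AWS.

```python
def canary_routing_config(new_version: str, weight: float) -> dict:
    """Build a Lambda alias RoutingConfig that sends `weight` of traffic to `new_version`.

    The alias itself keeps pointing at the stable version; only the given
    fraction of invocations is shifted to the new one.
    """
    if not 0.0 <= weight < 1.0:
        raise ValueError("weight must be in the range [0.0, 1.0)")
    if weight == 0.0:
        # Rollback: no additional version receives traffic.
        return {"AdditionalVersionWeights": {}}
    return {"AdditionalVersionWeights": {new_version: weight}}


def should_roll_back(errors: int, invocations: int, max_error_rate: float = 0.01) -> bool:
    """Assumed policy: roll back if the canary's error rate exceeds the threshold."""
    if invocations == 0:
        return False  # no traffic yet, nothing to judge
    return errors / invocations > max_error_rate


# Applying the config with boto3 would look roughly like this
# ("checkout" and "live" are hypothetical function and alias names):
#
#   import boto3
#   boto3.client("lambda").update_alias(
#       FunctionName="checkout",
#       Name="live",
#       RoutingConfig=canary_routing_config("7", 0.1),
#   )
```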

Over time, this changes how organizations operate. Releasing becomes ordinary. Monitoring becomes continuous. Recovery becomes routine rather than reactive.

Speed emerges not because deployment is faster in seconds, but because teams are no longer afraid to deploy at all.

This distinction is subtle but critical. Infrastructure influences psychology. Psychology influences delivery. Delivery determines whether a product survives in dynamic markets.

Elasticity Enables Strategy, Not Just Scalability

Scalability is often treated as a technical requirement. In reality, it is a strategic asset.

Traditional systems require growth forecasts. Capacity planning forces teams to predict the future. Budgeting forces them to commit early. Architecture decisions become bets on traffic patterns that may never arrive.

Serverless eliminates that pressure. Systems respond to demand in real time. Capacity expands automatically. Cost aligns with use rather than projection.
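Lambda's two billing dimensions, requests and GB-seconds of compute, make "cost aligns with use" concrete. The prices below are assumptions based on published x86 on-demand rates in us-east-1 at the time of writing. Actual rates vary by region and architecture, and the sketch ignores the free tier, so treat the numbers as placeholders.

```python
# Assumed prices (USD); check current pricing for your region and architecture.
PRICE_PER_MILLION_REQUESTS = 0.20
PRICE_PER_GB_SECOND = 0.0000166667  # free tier ignored


def estimate_compute_cost(invocations: int, avg_duration_ms: float, memory_mb: int) -> float:
    """Pay-per-use estimate: request charges plus GB-seconds actually consumed."""
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    request_cost = invocations / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    return request_cost + gb_seconds * PRICE_PER_GB_SECOND
```

These assumed prices give the following figures:

* 1,000,000 invocations at 100 ms and 128 MB come to about $0.41.
* Ten times that traffic costs ten times as much, about $4.08.
* Zero traffic costs nothing for compute.

None of these figures requires a forecast made in advance.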

This allows organizations to operate differently. Teams can launch without perfect foresight. Markets can be tested without infrastructure commitments. Growth becomes a measured response instead of a leap of faith.

Elasticity does not just prevent outages. It reshapes business decision-making.

Common Failures in Serverless Adoption

Serverless does not automatically produce good systems. In distributed environments, poor design choices simply become visible faster.

Teams often make the mistake of turning functions into miniature monoliths. Logic grows unchecked. Responsibilities blur. Observability is neglected. Costs rise invisibly. Complexity spreads.

The result is infrastructure that scales technically but collapses operationally.

Good serverless systems require disciplined design: clear service boundaries, explicit error handling, and meaningful monitoring. Without these, teams merely replace one form of complexity with another.
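The sketch below illustrates clear boundaries and explicit error handling with a thin handler for an API Gateway proxy-style event: a JSON string in `body`, and a response with `statusCode` and `body`. Validation, domain logic, and Lambda plumbing live in separate functions; `parse_signup`, `register`, and `handle_signup` are hypothetical names. Expected client errors become explicit 400 responses. Anything unexpected is allowed to raise, so the invocation is recorded as an error rather than silently swallowed.

```python
import json
from typing import Optional


class ValidationError(Exception):
    """Raised when the request does not match the expected shape."""


def parse_signup(body: Optional[str]) -> dict:
    """Boundary check: accept only a JSON object with a non-empty 'email' string."""
    try:
        data = json.loads(body or "")
    except json.JSONDecodeError as exc:
        raise ValidationError("body is not valid JSON") from exc
    if not isinstance(data, dict) or not isinstance(data.get("email"), str) or not data["email"]:
        raise ValidationError("'email' is required")
    return data


def register(email: str) -> str:
    """Hypothetical domain logic, kept separate from the Lambda plumbing."""
    return f"user:{email.lower()}"


def handle_signup(event, context=None):
    try:
        signup = parse_signup(event.get("body"))
    except ValidationError as exc:
        # Expected client error: answer explicitly instead of failing the invocation.
        return {"statusCode": 400, "body": json.dumps({"error": str(exc)})}
    # Unexpected errors from register() propagate and show up as Lambda errors.
    user_id = register(signup["email"])
    return {"statusCode": 201, "body": json.dumps({"id": user_id})}
```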

Serverless is an amplifier. What it amplifies depends entirely on what you build.

Learning Speed Is the Real Measure of Velocity

The fastest teams are not the ones that deploy most often. They are the ones that learn most effectively from every release.

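Serverless accelerates learning loops by making systems easier to observe. Logs from every invocation are collected centrally, and platform metrics such as invocations, errors, and duration are emitted continuously. Failures are scoped to a single function or event rather than to the whole system.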
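CloudWatch's Embedded Metric Format (EMF) is one common way to make Lambda logs structured and turn them into metrics at the same time. An EMF record is a JSON log line with an `_aws` metadata block that CloudWatch can extract into a metric. The sketch below builds such a line by hand. The namespace, metric, and dimension names are hypothetical, and in practice a library such as the AWS Lambda Powertools metrics utility can handle this.

```python
import json
import time


def emf_log_line(namespace: str, metric: str, value: float, unit: str, dimensions: dict) -> str:
    """Build one log line in CloudWatch Embedded Metric Format (EMF).

    Printed to stdout from Lambda, the line is both a structured, searchable
    log entry and, once CloudWatch extracts it, a metric data point.
    """
    record = {
        "_aws": {
            "Timestamp": int(time.time() * 1000),  # milliseconds since the epoch
            "CloudWatchMetrics": [
                {
                    "Namespace": namespace,
                    "Dimensions": [list(dimensions)],  # one dimension set, by key name
                    "Metrics": [{"Name": metric, "Unit": unit}],
                }
            ],
        },
        **dimensions,   # dimension values are top-level members
        metric: value,  # so is the metric value itself
    }
    return json.dumps(record)


if __name__ == "__main__":
    print(emf_log_line("Checkout", "PaymentLatency", 182, "Milliseconds", {"Service": "payments"}))
```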

When systems explain themselves, improvement becomes actionable instead of speculative. Teams stop guessing and start responding.

Velocity is not about throughput. It is about feedback.

Serverless as an Organizational Enabler

Although serverless is discussed as an infrastructure decision, its real impact is organizational.

It reduces dependency chains between teams. It decentralizes ownership. It lowers the operational tax on development. It creates space for autonomy.

Teams move from maintaining systems to improving products.

This shift is subtle but enduring.

How Zarego Helps Teams Build for Speed Without Sacrificing Control

At Zarego, we approach serverless as a design philosophy, not a technical shortcut.

Our goal is not to replace servers with functions. It is to build systems that change safely under pressure.

We help teams architect for evolution instead of prediction. We design systems that adapt without rewrites, scale without fear, and fail without catastrophe. We align infrastructure with strategy, not just performance targets.

Serverless, when done well, makes growth less dangerous.

And building safely at speed is not optional anymore. It’s the baseline.

If you’re building something that needs to grow without becoming brittle, let’s talk.